Our journal article on data science workflows published in EMSE.

A new paper in the Empirical Software Engineering journal!

Workflow analysis of data science code in public GitHub repositories

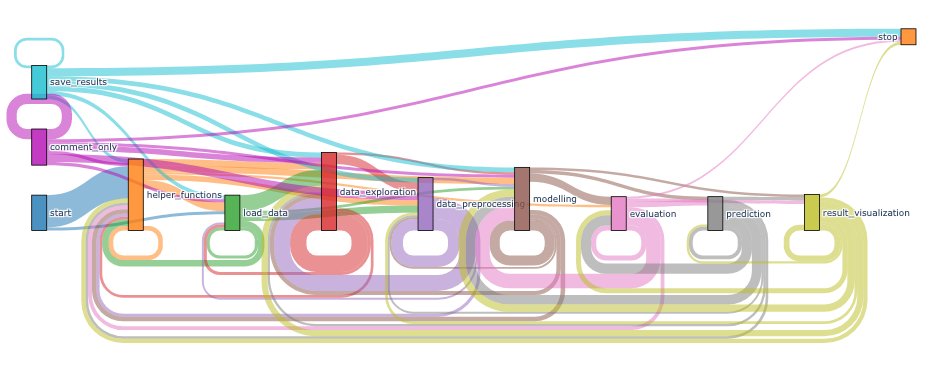

Understanding how data science solutions are implemented is necessary to create tools that assist data scientists. While these solutions typically follow a workflow from data collection to insights, the literature suggests that they are exploratory and non-linear.

In this work, we study real-world data science workflows to understand their characteristics in detail. Our work provides further empirical evidence of their non-linearity and elaborates on the interactive patterns in these workflows. The subjects of our study are data science workflows implemented in Jupyter, a popular development environment. Jupyter lets data scientists create and share workflows as computational notebooks, which contain a sequence of cells that logically separate blocks of code.
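To make the notebook structure concrete: an `.ipynb` file is plain JSON, and in the nbformat 4 schema its `cells` list records each cell's `cell_type` and `source`. The sketch below pulls out the code cells in notebook order, which is the unit a cell-level annotation works with. The function name `extract_code_cells` and the toy notebook are illustrative assumptions, not part of DASWOW or its tooling.

```python
import json


def extract_code_cells(notebook_text: str) -> list[str]:
    """Return the source of each code cell, in notebook order (illustrative helper)."""
    nb = json.loads(notebook_text)
    cells = []
    for cell in nb.get("cells", []):
        if cell.get("cell_type") != "code":
            continue  # skip markdown and raw cells
        source = cell.get("source", "")
        # nbformat allows `source` to be a single string or a list of lines
        if isinstance(source, list):
            source = "".join(source)
        cells.append(source)
    return cells


# A tiny hand-written notebook used only for demonstration
example = json.dumps({
    "nbformat": 4,
    "nbformat_minor": 5,
    "metadata": {},
    "cells": [
        {"cell_type": "markdown", "metadata": {}, "source": ["# Load data"]},
        {"cell_type": "code", "metadata": {}, "outputs": [], "execution_count": None,
         "source": ["import pandas as pd\n", "df = pd.read_csv('data.csv')"]},
        {"cell_type": "code", "metadata": {}, "outputs": [], "execution_count": None,
         "source": "df.describe()"},
    ],
})

print(extract_code_cells(example))
```

Only the two code cells are returned; the markdown heading is skipped, although in a real analysis it may still carry useful context about what the following code does.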

To conduct the analysis, 1) we first propose a way to characterise and identify the different steps of a data science workflow implemented in computational notebooks. 2) We then develop DASWOW, an expert-annotated dataset of 470 notebooks in which each code cell is annotated with the data science step it performs. 3) Using DASWOW, we provide deeper empirical insights into data science workflows. In particular, we provide evidence that these workflows are indeed iterative and reveal specific patterns in them. 4) Finally, we show that DASWOW can be extended through supervised learning methods to enable large-scale analysis.
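Once every code cell carries a step label, questions about iteration become simple sequence questions. One possible way to quantify it, sketched below, is to count consecutive cell pairs that jump back to an earlier step and to tabulate step-to-step transitions. The step names and their linear order in `STEP_ORDER` are hypothetical placeholders, not the DASWOW label set, and this is not the analysis method used in the paper; it only illustrates what cell-level annotations make possible.

```python
from collections import Counter

# Hypothetical linear order of workflow steps (NOT the DASWOW taxonomy)
STEP_ORDER = ["collect", "explore", "process", "model", "evaluate", "report"]
RANK = {step: i for i, step in enumerate(STEP_ORDER)}


def backward_transitions(labels: list[str]) -> int:
    """Count consecutive cell pairs where the notebook returns to an earlier step."""
    return sum(1 for a, b in zip(labels, labels[1:]) if RANK[b] < RANK[a])


def transition_counts(labels: list[str]) -> Counter:
    """Count how often each (step, next_step) pair occurs between consecutive cells."""
    return Counter(zip(labels, labels[1:]))


# Made-up step labels for the code cells of one notebook, in order
cells = ["collect", "explore", "process", "explore", "process",
         "model", "evaluate", "process", "model", "evaluate"]

print(backward_transitions(cells))
print(transition_counts(cells))
```

A strictly linear notebook would have zero backward transitions; here the sequence loops back from processing to exploration and from evaluation to processing, which is the kind of non-linearity the annotations help surface.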

Read more in the paper “Workflow analysis of data science code in public GitHub repositories”.

The DASWOW dataset is openly available.